Welcome

About

Hi, my name is Adam Parker, also known as grab-a-byte. This here is my blog, you’ll see me around with this mascot

My First Open Source Pull Request

2019-05-30

I always thought open source would be a scary world, and the start of it was. I was shown a nice little website named First Timers Only ( https://www.firsttimersonly.com/ ), and this was my entry point. This led me to the UpForGrabs page and I started filtering by what I knew: .NET. (Yes, I skipped First Contributions; if I don’t know how to do a pull request after working in industry for a year, I may be in the wrong profession.)

I found many projects I recognized very quickly: Chocolatey CLI, Fluent NHibernate, FakeItEasy and even Mono! All of these are massive, well-known projects and, if I’m honest, I thought I didn’t stand a chance with any of the issues found on their GitHub repositories.

Very quickly, my desire to contribute to open source started to dwindle due to my belief in my lack of skill. So where did my first OS PR go? It went to a small start-up project called Spotify-NetStandard ( https://github.com/RoguePlanetoid/Spotify-NetStandard ). Whilst I have no immediate plans for the library, I saw a talk on it and spotted parts of the API I thought could be improved, so I sat down and worked it out.

Forking the repository was easy, and making my changes was also easy (well, apart from the XML documentation part; I’d not done that before), but I think the biggest and hardest step is actually submitting the PR. Many thoughts went through my head: worrying that the library maintainer would not like the code, that I hadn’t done the documentation correctly, all manner of things. But I put it in anyway.

Getting it accepted and merged is a massive achievement for me. It is my first public commit outside of test projects, and it made me feel extremely happy (think the usual kid in a candy shop simile). It also gave me an understanding of what will drive me in future open source projects: doing work on features that I believe I would use. It is a big driving force, as you want the feature there so you can use it in the next project, or because you genuinely think it will be useful to both yourself and others. This has re-sparked my desire to get further into the world of open source and work my way up to the bigger projects I find myself using every day.

If you take one thing from this, it’s give it a try. If you’re scared of the big projects, go to meetups, meet other developers, they may have projects you can contribute to or even try starting your own. That’s my next stage, so wish me luck.

Thanks for reading.

Can contributing to open source be easy?

2019-06-16

A very leading question in the title, and the answer is: of course! Nobody said you had to commit code to be part of an open source project. Many people commit changes to documentation, and this is where my second open source pull request (PR)/commit lies. I was recently working on a project which uses the Prism Library (https://github.com/PrismLibrary), an MVVM framework for Xamarin Forms and WPF (Windows Presentation Foundation).

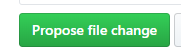

While reading the documentation to build up and add features for our application, I found a very simple typo in the documents which irked me a bit. The document simply said “batterly” instead of “battery”, which is small but also means it takes 30 seconds to fix. So this is exactly what I did: jumped over to the repository, navigated to the document and clicked the little icon that looks like a pencil in the top right corner (image below).

I then made the change, and hit the big green propose file change button at the bottom.

And then I had to accept the .NET Foundation’s Contributor License Agreement. It seems scary, but it is a form which asks a few questions and basically gets you to sign to say you are allowed to contribute to the work.

So, no code and yet another PR accepted and under my belt. This is also a nice way to show the documentation is being used; if not for reading this to learn about the product and how to use it for this particular project, it may have stayed unnoticed for some time.

Short and sweet this one, so thanks for reading.

Remove Cached Report Data SSRS

2019-09-05

I was working with a SQL Server Reporting Services (SSRS) report the other day and found myself changing some data while writing the report, to ensure the report was behaving as expected. Initially, nothing on the report changed even though I had changed the data. I found this odd and thought I might’ve been pointing at the wrong database. After a bit of googling, I found that report data gets cached after the first run of previewing a report.

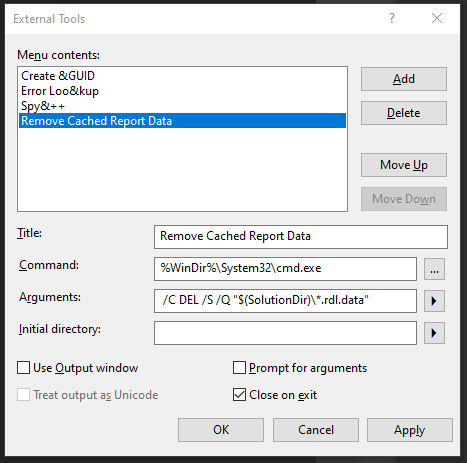

In order to force the data to update, you must delete the files with the extension “.rdl.data” from within the solution directory. I can’t remember exactly which folder they are in; however, it was getting tedious going into this folder and deleting those files all the time. As such, I had some fun playing around with the “External Tools” section of Visual Studio and came up with the below.

If you click on Tools->External Tools in the top menu, click Add, give it a name of your choosing and use the following settings:-

- Command: %WinDir%\System32\cmd.exe

- Arguments: /C DEL /S /Q "$(SolutionDir)*.rdl.data"

WARNING: THIS HAS ONLY BEEN RUN ON WINDOWS

All this says is open up cmd.exe (Command Prompt) and run the command placed into the arguments. In this case, it will delete all “.rdl.data” files within the solution directory and its subdirectories (/S), without prompting for confirmation (/Q).
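If you would rather not shell out to cmd.exe, roughly the same clean-up can be sketched in C#. This is only an illustration, not part of the Visual Studio setup above: the class name `ReportCacheCleaner` and the method `DeleteCachedReportData` are made up for this example, and it assumes the solution directory is passed in as the first argument.

```csharp
using System;
using System.IO;

public static class ReportCacheCleaner
{
    // Hypothetical helper: deletes every "*.rdl.data" file under rootDirectory,
    // including subfolders, and returns how many files were removed.
    public static int DeleteCachedReportData(string rootDirectory)
    {
        // GetFiles returns a complete array up front, so deleting while looping is safe.
        var files = Directory.GetFiles(rootDirectory, "*.rdl.data", SearchOption.AllDirectories);
        foreach (var file in files)
        {
            File.Delete(file);
        }
        return files.Length;
    }

    public static void Main(string[] args)
    {
        // Assumption: the solution directory is the first argument,
        // falling back to the current directory when none is given.
        var root = args.Length > 0 ? args[0] : Directory.GetCurrentDirectory();
        var count = DeleteCachedReportData(root);
        Console.WriteLine($"Deleted {count} cached report data file(s) under {root}");
    }
}
```

Compiled as a small console app, this could be wired into External Tools in place of cmd.exe, with $(SolutionDir) as the argument.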

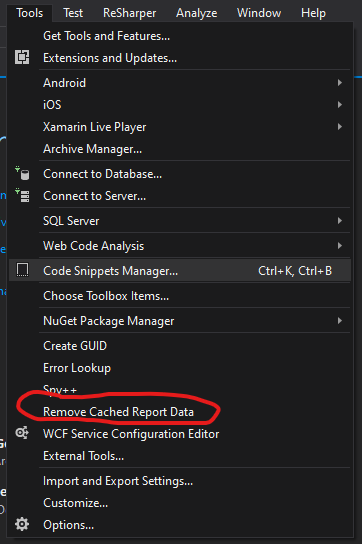

You should now have a handy button in the Tools menu with whatever you called your external tool (in my case “Remove Cached Report Data”)

Clicking this will now remove report data and you don’t have to worry about continuously jumping into the File Explorer and removing these files while testing reports, simply click this before each run and any data caching should be removed.

Hope this helps.

Xamarin Shell For Web Developers

2020-01-27

Coming from web development, I have become very familiar with the MVC architecture. It allows for good decoupling of components and also allows for excellent use of Dependency Injection (DI). Having started teaching myself mobile app development through Xamarin Forms, I found the view-centric approach to navigation very odd. It was tightly coupled to the framework and I spent hours trying to come up with the perfect navigation service that was generic enough to use.

Luckily for me, the team at Xamarin have done a lot of the heavy lifting with a feature introduced in Xamarin Forms 4.0 known as Xamarin Shell. This gave us a nice new way to navigate around our application using URLs. Whilst still coupled to the framework, it makes it easier to pass parameters and lets us think in a way more similar to how web applications work.

In this repo, I created a simple proof of concept for the idea which I will describe below, feel free to have a look at the code along the way.

So to liken this to MVC, we will break this down into the respective parts and describe how we can transition this into the Xamarin Shell Model.

Model:- The model part of your application does not change. It is still the simple data being stored that we all know and love from web development.

View:- The view also does not change (at least on the Xamarin Forms side of things) and is a simple XAML file that your designers can play with to their hearts’ content. The only difference here is that the navigation will be performed using Shell; however, it is likely you will decouple this from the view (something I have not done in the demo app).

Controller:- The controller of a traditional MVC app is usually handled by the framework, passing in parameters and binding them for you. This is where we have to do the most work. Firstly, the Shell Navigation uses a URL to navigate to, this then fires off the following events in order :-

- The code behind for the target page gets bound to the parameters. This is done using attributes provided by Xamarin, which look like the following:

    [QueryProperty(nameof(ItemId), "id")]

  This binds the parameter passed from the URL navigation to a property in the code behind.

- In the code behind’s OnAppearing method, fire off a request to get the data needed for the ViewModel. In the example project, I simply use the whole response from the request; however, this could be built up however you like. Think of this as similar to making calls to services in an MVC application. I do this using Jimmy Bogard’s MediatR, which is resolved using Xamarin Forms’ built-in Dependency Resolver in the code behind.

    public ItemDetailPage()
    {
        InitializeComponent();
        this.mediator = DependencyService.Resolve<IMediator>();
    }

- Finally, once the calls have been made, you can create the view model to bind to your view, which is then rendered.

Of course, to achieve this simplicity you need to set up the DependencyService from Xamarin Forms with MediatR itself. This, however, is no different to building up your favourite Dependency Injection container in ASP.NET MVC and setting the resolver for the Dependency Service at app startup. I do this with the package Microsoft.Extensions.DependencyInjection, using the ServiceProvider class as shown in the code below.

    public partial class App : Application
    {
        public App()
        {
            InitializeComponent();

            var serviceProvider = SetupDependencyInjection();
            DependencyResolver.ResolveUsing(serviceProvider.GetService);

            MainPage = new AppShell();
        }

        ServiceProvider SetupDependencyInjection()
        {
            var serviceCollection = new ServiceCollection();
            serviceCollection.AddSingleton<IDataStore<Item>, MockDataStore>();
            serviceCollection.AddMediatR(typeof(App).Assembly);
            return serviceCollection.BuildServiceProvider();
        }
    }

Once this is set up, as MediatR uses constructor injection, all the services from the handlers onward get resolved through constructor injection, just as if they were in an ASP.NET controller.

The final piece of the puzzle is for any routes which would appear outside your Shell XAML file. These include things like details pages, which will need to be navigated to, but only on the press of a button, and so are not part of the main app navigation structure. For these, in the AppShell.xaml.cs file, you will need to call RegisterRoute for each page you wish to add. I set up a method I call from the constructor to do this.

    public AppShell()
    {
        InitializeComponent();
        RegisterRoutes();
    }

    void RegisterRoutes()
    {
        Routing.RegisterRoute("ItemDetailPage", typeof(ItemDetailPage));
    }

Hope someone finds this useful!

Dotnet Interop Intro

2020-02-29

What is DotNet?

DotNet is a whole stack. It can get very confusing when talking about .NET and what it is. The main part which actually makes all of the following possible is the .NET runtime.

What this runtime is, in simple terms, is a layer which takes the compiled code and runs it on the machine. It manages to do this by reading what is known as Intermediate Language (IL) and translating it to run natively on the machine. This combination of IL and runtime allows for some rather interesting things, and in this series of posts, we’ll be talking about Interoperability (Interop for short).

What is Interop?

If you Google DotNet interop and open the first page, it’s likely Microsoft Documentation which states the following :-

“Interoperability enables you to preserve and take advantage of existing investments in unmanaged code. Code that runs under the control of the common language runtime (CLR) is called managed code, and code that runs outside the CLR is called unmanaged code. COM, COM+, C++ components, ActiveX components, and Microsoft Windows API are examples of unmanaged code.”

I personally find this a mouthful and confusing and as such I simply prefer to think of it as using libraries from other languages that can run in the CLR (which runs on the runtime, I never said .NET made any sense).

Future plans

So, with the above said and done, I’m here to announce a small series I will be doing on .NET Interop. I will be covering VB, C# and F#; however, I have also seen this done with C++ (my C++ is far too rusty to figure it out). All posts will use C# and one other language, and will explore how .NET manages languages with the same programming paradigm (C# and VB, both Object-Oriented/Imperative) and how it deals with differences in paradigms (C# and F#, Imperative vs Functional).

Hope you enjoy the upcoming posts!

Dotnet Interop:- C# and Visual Basic

2020-04-01

This is Part 1 in my series of Blog Posts on DotNet interop.

Why Visual Basic?

I decided to use Visual Basic as my first way to show Interop, as C# and Visual Basic both share the same programming paradigm of object orientation.

A sample of the languages!

Below I have snippets of two classes which are mirrors of each other in both Visual Basic and C#.

Visual Basic

    Public Class MyVBClass
        Private Multiplier As Integer

        Function AddTwo(A As Integer, B As Integer) As Integer
            Return A + B
        End Function

        Sub SetMultiplier(NewMultiplier As Integer)
            Multiplier = NewMultiplier
        End Sub

        Function MultiplyByMultiplier(NumToMultiply As Integer) As Integer
            Return NumToMultiply * Multiplier
        End Function
    End Class

C#

    using System;

    namespace CSharpClassLib
    {
        public class MyCSharpClass
        {
            private int multiplier;

            public int AddTwo(int A, int B) => A + B;

            public void SetMultiplier(int newMultiplier)
                => multiplier = newMultiplier;

            public int MultiplyByMultiplier(int numToMultiply)
                => numToMultiply * multiplier;
        }
    }

So How Do We Get Started

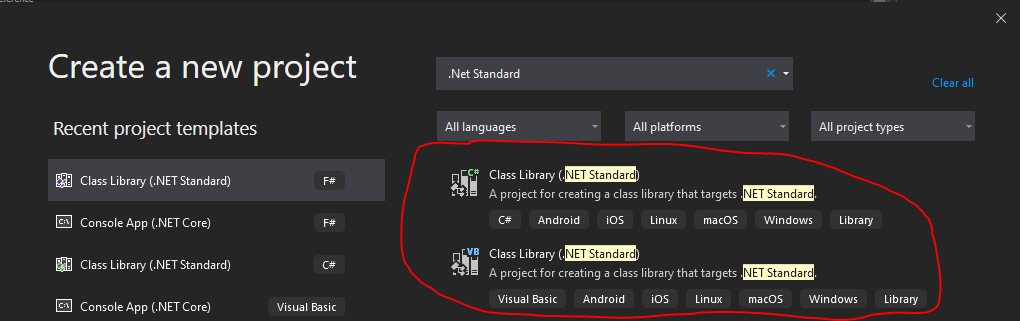

Both of the above classes were created in their relevant .NET Standard class library projects from Visual Studio.

Once these have been created and compiled, you will end up with a DLL file inside the respective ‘bin’ folder of each project. If these are in individual solutions and compiled completely separately, you would need to copy this file over to the project you wish to use it in (say, copy the Visual Basic DLL to a C# Console App), add a reference (using browse for file), and voila! That’s all that’s required. You can now use the Visual Basic code just like C# code, as shown in the below example.

    using System;
    using VBClassLib;

    namespace CSharpConsole
    {
        class Program
        {
            static void Main(string[] args)
            {
                MyVBClass myVBClass = new MyVBClass();

                var vbResult = myVBClass.AddTwo(1, 2);
                Console.WriteLine(vbResult);

                myVBClass.SetMultiplier(4);
                var myVBResult2 = myVBClass.MultiplyByMultiplier(4);
                Console.WriteLine(myVBResult2);
            }
        }
    }

If you didn’t know any better, you may assume that you were using a standard C# library!

To prove this point further, I have also created an example that uses the popular C# library Newtonsoft.Json inside of a Visual Basic project.

    Imports Newtonsoft.Json

    Module Program
        Sub Main(args As String())
            Dim numbers = New Integer() {1, 2, 4, 8}
            Dim serializeResult = JsonConvert.SerializeObject(numbers)
            Console.WriteLine(serializeResult)
            Console.ReadKey()
        End Sub
    End Module

Again, as simple as adding the NuGet Package and using it. Rather spectacular if you ask me.

What if I can’t be bothered to copy files?

There is another point to be made here: if you are writing both yourself, you can add both projects to the same solution file, and Visual Studio is clever enough to compile both separately as well as allow you to link them with a project reference. I can’t imagine a scenario where you would want to do this (except for demo purposes), but it is cool this feature exists.

One small caveat with the above is that if you are using the VB Class Library in the C# console project and you update the VB Class Library, you will need to rebuild it before the changes are picked up by Visual Studio as usable in the C# project.

I am yet to find anything in VB which cannot be used in C#, but I have only used VB a small amount and have kept it simple for this example. I would love to hear if anyone has found anything which causes complications. If you have any questions, feel free to ask and I will try to answer.

Thanks for reading!

C# and F# Pt. 1

2020-04-29

This is Part 2 in my series of Blog Posts on DotNet interop.

What makes F# special?

In the Dotnet world, F# stands out as being the functional language. This might sound strange, and I don’t have time to go into the full difference between Functional and Object-Oriented languages and paradigms here, so please do a Google search if you wish to learn more.

Some of the main features of F# that are relevant to this series are as follows:-

- Higher Order Functions

- Modules

- Records

- Sequences

- Discriminated Unions

- Options

I put them in this order for a reason. This is the list I will be discussing and showing how the Dotnet runtime allows these two languages of different paradigms to happily (in most cases) work together.

Modules and Namespaces

Let’s start with Namespaces. While F# can use namespaces, it will normally use Modules. However, if you decide to add a namespace declaration to the top of your F# file, when you reference the output library in your C# project you will notice no difference when importing the namespace: you still use ‘using FSharp.Namespace’, or whatever you called your library.

So why bother with Modules at all? Modules are more in line with the way F# has been built up and, as such, you will find them used in most F# libraries instead. We can still use these Modules in C#.

When a Module is imported, it presents itself as a static class in C#. This allows for seamless usage between F# and C# code on both sides, as in F# we use ‘open’ statements to import C# namespaces.

For example, this code here:

    namespace FSharpClassLib

    module FunctionalParadigms =
        let aNumber = 5
        type x = | One | Two

Can be used in a C# console app as follows:

    using FSharpClassLib;

    namespace CSharpConsole
    {
        class Program
        {
            static void Main(string[] args)
            {
                var test = FunctionalParadigms.aNumber;
            }
        }
    }

Above, you also see an example of standalone values being used from F# in C#, again simply showing up as a static, read-only property.

Records

In F#, “Records represent simple aggregates of named values, optionally with members. They can either be structs or reference types. They are reference types by default.” That seems like a mouthful, but basically it means it’s an object that holds a combination of values. In C# terms, it’s effectively a class with a bunch of properties on it. Whilst that sounds simple, something to know about F# is that it uses Structural Equality and, by default, its data structures are Immutable. For those unfamiliar with these terms, they are as follows:

- Structural Equality : An object is equal to another if all its properties match, not if it is the same reference in memory.

- Immutable : The object cannot change over time.

With these defined, it may come as no surprise that to instantiate an F# record in C#, you use an ‘All Arguments Constructor’. This guarantees known values. It also allows the properties consumed by C# to be read-only and, as such, keeps in line with the Immutability aspect of F#.

The Structural Equality also gets implemented by default, even on the C# side. So using a record made in F#, and writing

    var a = new Thing(1, true);
    var b = new Thing(1, true);

in C#, the objects a and b are equal.
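To make the difference concrete without needing an F# project, here is a minimal, pure C# sketch. The `Thing` class below is a hand-written stand-in (not what the F# compiler actually emits) that compares by value, next to a hypothetical `PlainThing` class that keeps C#’s default reference equality.

```csharp
using System;

// Hypothetical hand-written stand-in for an F# record: read-only properties,
// an all-arguments constructor, and equality decided by comparing values.
public sealed class Thing : IEquatable<Thing>
{
    public int Number { get; }
    public bool Flag { get; }

    public Thing(int number, bool flag)
    {
        Number = number;
        Flag = flag;
    }

    public bool Equals(Thing other) =>
        !(other is null) && Number == other.Number && Flag == other.Flag;

    public override bool Equals(object obj) => Equals(obj as Thing);

    public override int GetHashCode() => (Number * 397) ^ Flag.GetHashCode();
}

// A plain class with no Equals override falls back to reference equality.
public sealed class PlainThing
{
    public int Number { get; }
    public bool Flag { get; }

    public PlainThing(int number, bool flag)
    {
        Number = number;
        Flag = flag;
    }
}

public static class EqualityDemo
{
    public static void Main()
    {
        Console.WriteLine(new Thing(1, true).Equals(new Thing(1, true)));           // True
        Console.WriteLine(new PlainThing(1, true).Equals(new PlainThing(1, true))); // False
    }
}
```

An F# record saves you from writing any of the `Thing` plumbing by hand, which is a large part of its appeal.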

To show this in action, here’s a simple F# Record:

    namespace FSharpClassLib

    module FunctionalParadigms =
        type TypeTwo = {
            isTrue : bool
            SomeNumber: double
        }

It is used in a C# Console App as follows:

    using FSharpClassLib;
    using System;

    namespace CSharpConsole
    {
        class Program
        {
            static void Main(string[] args)
            {
                var a = new FunctionalParadigms.TypeTwo(true, 1.1);
                var b = new FunctionalParadigms.TypeTwo(true, 1.1);

                Console.WriteLine(a.Equals(b)); // This prints True!
                Console.ReadKey();
            }
        }
    }

And when decompiled, shows the following

    public sealed class TypeTwo : IEquatable<TypeTwo>, IStructuralEquatable, IComparable<TypeTwo>, IComparable, IStructuralComparable
    {
        public TypeTwo(bool isTrue, double someNumber);

        [CompilationMapping(SourceConstructFlags.Field, 0)]
        public bool isTrue { get; }

        [CompilationMapping(SourceConstructFlags.Field, 1)]
        public double SomeNumber { get; }

        [CompilerGenerated]
        public sealed override int CompareTo(TypeTwo obj);

        [CompilerGenerated]
        public sealed override int CompareTo(object obj);

        [CompilerGenerated]
        public sealed override int CompareTo(object obj, IComparer comp);

        [CompilerGenerated]
        public sealed override bool Equals(object obj, IEqualityComparer comp);

        [CompilerGenerated]
        public sealed override bool Equals(TypeTwo obj);

        [CompilerGenerated]
        public sealed override bool Equals(object obj);

        [CompilerGenerated]
        public sealed override int GetHashCode(IEqualityComparer comp);

        [CompilerGenerated]
        public sealed override int GetHashCode();

        [CompilerGenerated]
        public override string ToString();
    }

Neat huh?

I think this should be enough information for now, however, in the next post we will continue exploring this interaction between the two languages and how it plays well together.

Any questions? Comment below or feel free to use the contact me page.

See you next time!

C# and F# Pt. 2

2020-07-03

This is Part 3 in my series of Blog Posts on DotNet interop.

Continuing on from where we left off last time, we will try to cover off the rest of F#’s features that play really well and simply when being consumed from a C# codebase.

Values

F# can have values placed directly inside modules, almost ‘global’ values in the module. See an example below :-

    module FunctionalParadigms

    let aNumber = 5

As we know from the last post, modules expose themselves as static classes in C#. This value therefore just becomes a static readonly property of that static class, and C# can consume it as follows.

var num = FunctionalParadigms.aNumber;

Functions

Functions are quite core to F# and functional programming, in case you hadn’t guessed. So surely they should be easy enough to consume from C#? The answer here is: kind of, as long as you stick to passing plain types as parameters rather than passing functions around (which is normal in F#). The following example takes a string and returns its length (very trivial, I know).

    module FunctionalParadigms =
        let aFunction (string:string) =
            string.Length

It is consumed by C# as a static function on a static class :-

var strLength = FunctionalParadigms.aFunction("Thing"); // Returns 5

Sequences

F# uses a lot of sequences. They are exposed to C# through the IEnumerable interface and, as such, can be treated as one without any issues.
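As a rough sketch of what that looks like from the C# side, the example below uses a hypothetical `GetNumbers` method as a stand-in for an F# function returning `seq<int>`. No F# library is referenced here; the point is only that anything typed as `IEnumerable<int>` can be looped over or fed into LINQ as usual.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class SequenceDemo
{
    // Hypothetical stand-in for an F# function returning seq<int>;
    // from C# the return type would simply show up as IEnumerable<int>.
    public static IEnumerable<int> GetNumbers()
    {
        yield return 1;
        yield return 2;
        yield return 3;
        yield return 4;
    }

    // Ordinary LINQ works on the sequence: keep the even numbers, square them, add them up.
    public static int SumOfEvenSquares(IEnumerable<int> numbers) =>
        numbers.Where(n => n % 2 == 0).Select(n => n * n).Sum();

    public static void Main()
    {
        foreach (var n in GetNumbers())
        {
            Console.WriteLine(n);
        }

        Console.WriteLine(SumOfEvenSquares(GetNumbers())); // 4 + 16 = 20
    }
}
```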

Discriminated Union

Discriminated Unions in F# are a ‘choice type’. They act in slightly different ways depending on how they are used. If we define one of them using integers (strictly speaking, F# calls this form an enumeration), it will compile down to a C# enum type. An example is as follows :-

    type LogLevels =
        | Error = 1
        | Warning = 2
        | Info = 3

Compiles down to C# as this :-

    public enum LogLevels
    {
        Error = 1,
        Warning = 2,
        Info = 3
    }

However, if we use any types within the Discriminated Union, it will create almost ‘factory functions’ for the sub-types. For example:

    type OtherLogLevels =
        | Error of int
        | Warning of struct(int * string)
        | Info of string

Gets the following interface in C#:

    public abstract class OtherLogLevels : IEquatable<OtherLogLevels>, IStructuralEquatable, IComparable<OtherLogLevels>, IComparable, IStructuralComparable
    {
        public bool IsInfo { get; }
        public bool IsWarning { get; }
        public bool IsError { get; }
        public int Tag { get; }

        public static OtherLogLevels NewError(int item);
        public static OtherLogLevels NewInfo(string item);
        public static OtherLogLevels NewWarning((int, string) item);

        public sealed override int CompareTo(OtherLogLevels obj);
        public sealed override int CompareTo(object obj);
        public sealed override int CompareTo(object obj, IComparer comp);
        public sealed override bool Equals(object obj);
        public sealed override bool Equals(object obj, IEqualityComparer comp);
        public sealed override bool Equals(OtherLogLevels obj);
        public sealed override int GetHashCode();
        public sealed override int GetHashCode(IEqualityComparer comp);
        public override string ToString();

        public static class Tags
        {
            public const int Error = 0;
            public const int Warning = 1;
            public const int Info = 2;
        }

        public class Warning : OtherLogLevels
        {
            public (int, string) Item { get; }
        }

        public class Error : OtherLogLevels
        {
            public int Item { get; }
        }

        public class Info : OtherLogLevels
        {
            public string Item { get; }
        }
    }

Unit

As F# hates the idea of null, it has a special type called unit. However, across the language boundary the two effectively become interchangeable. If an F# function receives a unit, you will likely pass a null. And if a unit is the return type from an F# function, in C# it interops as returning void. Simples.

Arrays

I don’t have anything clever to say here. It’s an array…

Conclusion

This brings me to the end of the “works really well” section of these blog posts. The next post will still be in the F# and C# Interop space, but will focus on things that work… with a few work-arounds.

C# and F# Pt. 3

2020-08-29

This is Part 4 in my series of Blog Posts on DotNet interop.

So over the last couple of posts, we’ve looked at what does work well in C# from F#, and most of it seems to go pretty smoothly. But there are occasions where the languages refuse to play nice with each other. That’s what we’ll be discussing in this final post.

Options

Options in F# are a way to get around the idea of null by providing two ‘sub-types’ called Some and None. Imagine trying to parse an Int from a String: if you provide a valid string such as “42”, it succeeds and the method should return Some(42); if you provide an invalid string, it may return None to indicate that it has indeed failed. An example being as follows:

    let parseInt (x: string) =
        match System.Int32.TryParse(x) with
        | success, result when success -> Some(result)
        | _ -> None

When consuming this from C#, you get an FSharpOption type (which I believe is in FSharp.Core.dll) and you get access to a particular property called Value. If you succeeded and managed to return the Some type, this will have the value it succeeded in getting (42 in the above example). However, if it returns None, C# interprets this as null and, as such, you lose the safety that comes from using Options in F# and will still be required to insert null checks from that point forward. E.g.

    var integerOption = FunctionalParadigms.parseInt("42");
    var val = integerOption.Value; // val is 42

    var integerOption2 = FunctionalParadigms.parseInt("Blah Blah Blah"); // integerOption2 is null
    var val2 = integerOption2.Value; // Throws a NullReferenceException

F# Lists

F# has the idea of a list; however, this differs from the C# type of List. This can cause confusion, but FSharp.Core.dll comes to the rescue again, as it allows C# to consume a FSharpList type. This does taint the C# code, however, and is something you may wish to avoid. To avoid importing the F# DLL (or NuGet package) into your project, note that F# lists implement IReadOnlyCollection, which is in System.Collections.Generic and as such is normal to use in C#:

    let getAList() = [1;2;3]

    IReadOnlyCollection<int> list = FunctionalParadigms.getAList();

Discriminated Unions

I know I already said Discriminated Unions work well in the last part, so why am I re-covering them? Well, there is one case where DUs don’t play nice in C#, and that is if you don’t provide a type or an integer on the cases and leave them ‘raw’:

    type Animal =
        | Dog
        | Cat
        | Parrot

In this situation, it creates a bunch of “Tags” which you either need to switch on, if else, or some other method. The class and an example of their usage is down below.

    public sealed class Animal : IEquatable<Animal>, IStructuralEquatable, IComparable<Animal>, IComparable, IStructuralComparable
    {
        public static class Tags
        {
            public const int Dog = 0;
            public const int Cat = 1;
            public const int Parrot = 2;
        }

        public int Tag { get; }
        public static Animal Dog { get; }
        public bool IsDog { get; }
        public static Animal Cat { get; }
        public bool IsCat { get; }
        public static Animal Parrot { get; }
        public bool IsParrot { get; }

        public override string ToString();
        public sealed int CompareTo(Animal obj);
        public sealed int CompareTo(object obj);
        public sealed int CompareTo(object obj, IComparer comp);
        public sealed int GetHashCode(IEqualityComparer comp);
        public sealed override int GetHashCode();
        public sealed bool Equals(object obj, IEqualityComparer comp);
    }

    private static void SwitchingOnNoTypeDUs()
    {
        Animal animal = Animal.Cat;

        switch (animal.Tag)
        {
            case Animal.Tags.Dog:
                Console.WriteLine("dog");
                break;
            case Animal.Tags.Cat:
                Console.WriteLine("cat"); // prints cat
                break;
            case Animal.Tags.Parrot:
                Console.WriteLine("polly want a cracker");
                break;
            default:
                throw new ArgumentOutOfRangeException();
        }
    }

The final part of this post is something that baffles me beyond belief but is unfortunately true. The one thing I found that doesn’t work well in the C# realms.

Functions

Yes. As a functional language, in F# you pass functions into functions and get functions returned from functions and it works a charm. Trying to pass a function from C#, not so friendly. My first thought when trying to do so was the following.

Say I have a function in F# like so :

    let doAStringFunction stringFunc =
        stringFunc "Something"

Quite simple, takes in a function that takes a string and returns the result of that function on the word “Something”. I tried to call it like this:

    FunctionalParadigms.doAStringFunction<int>((x) => x.Length);

Which to me seems logical but this doesn’t work. I will now present the actual solution and end this post before I start rambling beyond compare.

FunctionalParadigms.doAStringFunction(FSharpFunc<string, int>.FromConverter((x) => x.Length));

Functional Syntax Holding It Back?

While looking through many programming languages over the years, I have come to appreciate the ones which are based around the theory of functional programming. I find they are a joy to write and allow for some very expressive code in few characters. However, when looking at the popularity of many of these languages, I’ve noticed it seems to be low. As you may have guessed from the title, I think a large part of this is down to syntax, and I’d like to try and explain why. I’d like to preface this by saying it is not the only reason, but I think it is one of the primary ones.

For reference, this post is purely a food for thought kind of post and is not an argument in any way.

Most Popular Languages are also the most C-Like. Coincidence?

Using the website Languish as a means of measuring popularity, the top 8 (as of writing this) are as follows:

- Python

- JavaScript

- TypeScript

- Java

- C++

- C#

- Go

- C

All of these, with the exception of Python (whose popularity I imagine comes from data science and the ever-growing popularity of AI), have their basis in a C-like way of writing code (including, unsurprisingly, C…).

My definition of a C-like language is one that:

- Uses braces {} to denote scope.

- Uses parentheses () to denote function calls.

- Uses an explicit return keyword.

- Has type definitions used in the function signatures.
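As a small illustration of those four traits in one place, here is a sketch in C#. The names `CLikeDemo` and `Square` are made up purely for this example.

```csharp
using System;

public static class CLikeDemo
{
    // Type definitions appear in the signature: an int goes in, an int comes out.
    public static int Square(int value)
    {                           // braces open the function's scope
        return value * value;   // explicit return keyword
    }                           // and close it again

    public static void Main()
    {
        // Parentheses denote the function call.
        Console.WriteLine(Square(4)); // prints 16
    }
}
```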

These all check at least 3 of those 4 boxes and that is enough for me to call them C-Like. If you want to take away from this that they are mostly languages that encourage the use of Object-Oriented design then you may be on the right track with this article.

A final point on these languages is that none of them will claim to have come from a functional background and while some may have some functional features in their modern incarnations it was not a thought when they first came about.

Side Note: Meta-Language (ML) Syntax

ML style languages are what I would name the opposite of C-like and they tend to have the following attributes:

- Use whitespace indentation to denote blocks.

- Heavier use of type inference to determine types (leading to fewer type definitions being written).

- No parentheses to call functions, opting for a whitespace delimited list of arguments instead.

- Uses the last evaluated expression as a return without the need of a specific return keyword.

Most Popular Languages with Functional Concepts

To further the evidence that a C-like syntax is prevalent, I’d like to shift my attention to a couple of other popular languages that have been designed with some of these functional programming concepts all built in from the get-go of their design.

Scala

Scala is one of the most popular functional languages around and can be found at https://www.scala-lang.org/. I suppose I should very much specify that when I am talking about Scala I am talking about Scala versions 1 & 2 as Scala 3 has removed a lot of the syntax likeness to C that I am going to discuss. The fact that the language is still on a decline on the Languish website despite these changes only strengthens the argument I have about it.

At the time of writing this, Scala sits at position 31 on the Languish website, which is quite a feat given the 507 languages the page currently tracks (even if some are not proper programming languages). However, its syntax is very similar to Java. I would imagine that a large part of this is due to the language running on the JVM and needing to interop with Java code, so adding some syntactic sugar over what Java is able to do would seem like a sensible way to bring the language to the masses of Java developers in the world.

As a prime example of how Scala can make the code more concise, we can look at the following example of a data class (Only get properties and a constructor requiring all arguments)

Java:

public class Person {

private final String firstName;

private final String lastName;

public Person(String firstName, String lastName) {

this.firstName = firstName;

this.lastName = lastName;

}

public String getFirstName() {

return firstName;

}

public String getLastName() {

return lastName;

}

}

Scala:

class Person(val firstName: String, val lastName: String)

As you can see, despite the conciseness, the syntax itself is still similar to that of the Java constructor and the C-like styling. If you would like to see more examples of this, have a Google for examples of Scala vs Java code and you can find many more examples where Scala just looks like a ‘cleaner’ Java.

Rust

Of course we need to speak about the elephant in the room here regarding a language with functional concepts built in from the start. Rust is a systems programming language that has taken the world by storm: it is used in Firefox’s JavaScript and WebAssembly engine, it has made it into the Linux kernel, and Microsoft has invested one million dollars into the foundation behind it. Furthermore, it currently sits at position 10 on the Languish website and is a roaring success of a programming language.

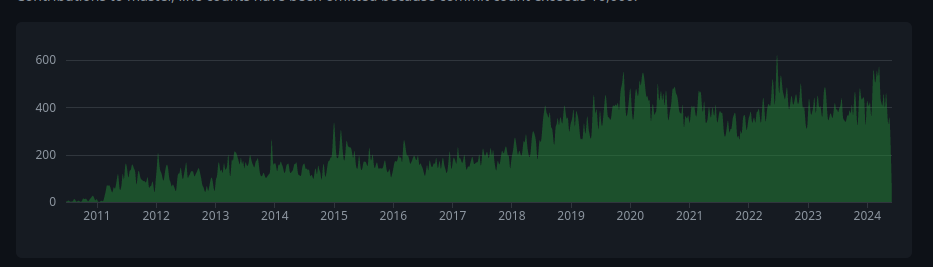

However, the compiler itself shows a telling story of how much the syntax (as well as familiarity with the language) can have a huge impact. Back in its first incarnation, the Rust compiler was written in OCaml as can be seen here in a commit from 2011, just before the compiler was re-written in Rust itself.

Using the Github metrics graphs, from 2013 onwards, we see a sharp uptick in the number of contributors to the Rust compiler as shown by this graph.

Obviously this is not all just due to the syntax, and probably has a large amount to do with the fact that people who were writing Rust could now contribute, but I would argue it’s that syntax that gave them the reason to learn Rust in the first place.

So is this trend going to continue?

I fully believe this trend is going to continue, and I have a couple of language examples to support this.

Zig

Zig is an up-and-coming language that while being in Beta, was deemed to be good enough to be used in two different fully fledged products known as Tigerbeetle and Bun.

Zig aims to be a systems level language similar to Rust listed above but with less guardrails around memory management making it closer to being able to be a drop in replacement for C. Obviously coming from that domain will lead to the language trying to replicate C in its syntax and it does with the addition of some Functional features such as:

And this is just to name a few. However, the uptake of Zig seems to lend further weight to the idea that a C-like syntax will bring in more people who are willing to try a language and make it more successful.

Gleam

Gleam is a really interesting language in that it uses the Erlang BEAM Virtual Machine. Erlang is a well known functional language that has been around for many many years (since 1986!) and currently sits at position 99 on the Languish website, again a fairly notable achievement given its functional and white-spaced nature.

The reason I mention Erlang is because Gleam is a language that had its Version 1.0 release on the 4th March 2024 and has skyrocketed up to position 174 on the Languish website. While that may not seem impressive, it still puts it in the top 50% of all languages tracked, and its position number is less than double that of the language that was built for the platform it runs on! Not too shabby for a new language. But also if we take a look at a sample of Gleam code:

import gleam/io

pub fn main() {

io.debug(double(10))

}

fn double(a: Int) -> Int {

multiply(a, 2)

}

fn multiply(a: Int, b: Int) -> Int {

a * b

}

As you can see, while not 100% on the C-like front, we still have the parentheses for function arguments and the braces for denoting scope. This is for a pure functional language that is running on a VM built for a functional language and we still see parts of C trying to crawl back into the syntax.

I suppose this is my final point on the matter of showing how languages continue to be driven by the syntax of C and how this syntax could be tied to the popularity of new programming languages.

Not to say “ML” inspired languages aren’t used

I should probably reiterate that non-C-like languages do 100% have a place in the world and can be very useful for particular scenarios, right tool for the job and all that. Furthermore, I’d be remiss not to point out that the benefits functional languages provide have been demonstrated by some very large companies using these non-C-like languages with great success. Examples of this include:

- Twitter uses Scala in its “Algorithm” (https://github.com/twitter/the-algorithm)

- Meta uses Haskell to filter out spam (https://engineering.fb.com/2015/06/26/security/fighting-spam-with-haskell/)

- Jane Street use OCaml for …well everything… (https://blog.janestreet.com/why-ocaml/)

Final tidbit

As a final tidbit of information, I think the reason for the above is the rise of Object-Oriented programming and its tendency to be C-like, with a lot of those languages being C derivatives. Functional programming is less popular, and for more on that topic I would point you to this great talk by Richard Feldman, the creator of the Roc programming language (another language with a non-C-like syntax). https://youtu.be/QyJZzq0v7Z4?si=nIQjntdT_2Rvshsx - Richard Feldman - Why isn’t functional programming the norm.

Thank you for reading, any comments or questions please reach out to me on my Github.

Gameboy ROM disassembler (Zig vs Go)

2025-06-19

Why bother?

I know this may seem like a strange title to start the blog post off with but I thought I would like to explain why I thought this could be an interesting concept.

Zig first and foremost is essentially trying to be a modernised C. After years of learning how not to do C and how to do C, Zig looks to be trying to keep C’s simplicity and amount of control with some more modern features in its type system and tooling.

Go on the other hand is a programming language with a garbage collector and less control over the hardware in the end. It is again designed to be simple and easy to learn and work in and involves some of the people who were involved with making C.

So given these two viewpoints, I thought it would be interesting to see how the two compared as they are both smaller languages with a C based background, just one has a garbage collector and less control and the other is closer to C’s inherent need to understand the hardware. Does having the garbage collector and so forth actually make much of a difference to speed, binary size and other factors?

To do this, I created a Gameboy ROM disassembler using the information at PanDocs; the repo can be found on my Github under the ‘gameboy’ repository.

Zig

Pros

Error Handling

I found the error handling in Zig to be amazingly simple to read. If you want to make a function return an error value, just put a ‘!’ in front of the return type. All errors that can be returned are automatically combined into a set, which guarantees you handle every case when you work with it. Handling these errors on the other side is also very easy to read, by using either the ‘try’ keyword to just pass the error back up the call chain (similar to the ‘?’ operator in Rust) or the ‘catch’ keyword to set a default value or match on the error.

Here’s an example stolen from the unofficial zig book.

fn print_name() !void {

const stdout = std.io.getStdOut().writer();

try stdout.print("My name is Pedro!", .{});

}

Another point, which I didn’t use in this project, is that you can use an ‘if’ statement with returned error unions: if you receive an error in response, you will immediately jump to the else branch, where you can switch on the returned error type to return a default value and ensure you handle all cases.

Optionals

While no optionals remained in my final working project I found myself happy with how they operated in terms of ergonomics as a developer. Similar to the Errors, this is denoted by simply putting a ‘?’ before the return type to denote it as being optional. With this, you can then use the ‘orelse’ keyword to give a default if it is null. Again, these also work with if expressions to allow making behaviour based on if a value is defined or not.

Here are a couple of examples of Zig’s optionals again stolen unashamedly from the unofficial zig book:

const num: ?i32 = 5;

if (num) |not_null_num| {

try stdout.print("{d}\n", .{not_null_num});

}

const x: ?i32 = null;

const dbl = (x orelse 15) * 2;

try stdout.print("{d}\n", .{dbl});

Allocators

While I was not able to fully appreciate the allocator model given this project and its minimal use of allocators, I found the idea to be very interesting: it gives the developer control over where and how memory is allocated, and also provides some helpful test allocators to check for memory leaks. I can see this being a great experience for someone who needs/wants to worry about these sorts of things (e.g. embedded devices) and it also gives me a better appreciation for when memory is allocated as opposed to other languages that hide this fact from you.

Exhaustiveness

This is one of my favourite features in Zig: switch expressions are exhaustive. As the Gameboy has a full description of its instructions, this ensured I had handled every instruction to be disassembled, and it also caught some mistakes I had made while porting over from the Go implementation (which I did first).

Cons

Strings

So in Zig, strings do not really exist as a type. They are represented as an array or slice of bytes (u8 in Zig terms). While this is fine and I could happily get along with this model, one part of it always annoys me: ‘const’. That’s it, this one keyword when working with strings drove me up the wall as I was never really able to fully determine if something needed to be a ‘[]u8’, a ‘[]const u8’ or something else.

pub fn main() !void {

Example("ABCD");

}

pub fn Example(input: []u8) void {

std.debug.print("{}", .{input});

}

This to me looks like it should work but it doesn’t as the Example function should take a ‘[]const u8’. To this day, I still don’t fully understand handling strings in Zig.

Tooling

While I understand that Zig is pre 1.0 and is still a work in progress, the tooling around it seems to be very lacklustre. In-editor errors for anything that is not a syntax error can be forgotten about, and you will be back and forth to your terminal to rebuild and find out what you’ve done wrong. This is not a great developer experience loop and there is no ‘watch’ mode to keep the build running and show the errors you have made (though I believe that the master branch has experimental support for this).

Other languages I’ve used that are also quite young (take Gleam for example) have given me a want for better tooling upfront in a language. Perhaps this will come once the language is more stable, as breaking changes are currently happening all the time, but it definitely makes Zig difficult to recommend except for enthusiasts (though many real-world projects use it, such as the Ghostty terminal and the TigerBeetle database).

Go

Pros

Tooling

Go’s tooling is sublime. I don’t think there’s any other way to put it. Using basically any IDE they are all able to identify and use ‘gopls’ (the go language server) and the ‘go fmt’ command is built right in to the binary. No additional things needed. In VS Code especially, you are prompted to download and install all the relevant tools when you first install the plugin if you do not have them installed already.

Simplicity

Go is a very simple language to learn. It reminds me of C, not much in there but it works and yes you can still shoot yourself in the foot with it. However, I think this also makes the Go code really easy to read and understand. No inheritance, No polymorphism, until recently there was even no Generics (and I also haven’t used them in my implementations).

For me coming from a C# Web Dev background, this feels very much like a breath of fresh air and forces me to think more about the problem instead of how I can make it as generic as possible.

Cons

Non mandatory error handling

This one bit me a few times. Unlike Zig, Go’s errors are returned as a second value. This makes it great for writing scripts where you just want to throw away the error, however when trying to ensure you are building a proper system, it makes it too easy for you to think you know better than the compiler.

I had many a time where I was certain I was not going to have an error, and yet on many occasions I found myself wondering why I was getting incorrect input; it was due to not handling the error and accidentally using a zero value (Go usually gives you an error and a zero value return when using functions that can error).

Here is an example:

func CanError() (int, error) {

if rand.IntN(100) < 50 {

return 0, fmt.Errorf("Sorry no")

}

return 42, nil

}

//Capturing the error

meaningOfLife, err := CanError()

if err != nil {

//Do something

}

// _ discards error and now I have no way to check it

defaultValue, _ := CanError()
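To make the pitfall concrete, here is a small self-contained sketch. The function halve is made up purely for illustration (it is not from my disassembler); it shows how a discarded error leaves you holding a zero value that looks like a perfectly valid result.

```go
package main

import (
	"errors"
	"fmt"
)

// halve is a hypothetical function used only for illustration.
// It fails for odd input and, as is conventional in Go, returns
// the zero value alongside the error.
func halve(n int) (int, error) {
	if n%2 != 0 {
		return 0, errors.New("cannot halve an odd number")
	}
	return n / 2, nil
}

func main() {
	// Handling the error: the failure is visible.
	if _, err := halve(7); err != nil {
		fmt.Println("error:", err)
	}

	// Discarding the error: the zero value looks like a real result.
	v, _ := halve(7)
	fmt.Println("value:", v)
}
```

Nothing in the second call tells the reader that 0 is not the real answer, which is exactly how I ended up chasing incorrect values.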

Statements over expressions

This may just be me being too used to languages that have expressions for many types of language constructs (e.g. ‘for’ in Scala, ‘match’ in F# and Rust, even ‘switch’ in Zig). For me, however, this was frustrating: having to go back to declaring a variable before a switch and ensuring it is set before returning it.

Given Go’s target at being a simple language, it seems odd to me that they have both a ‘switch’ and an ‘if’ as I feel the if handles all the cases (albeit not the most visually appealing code). This is a small part but was a bit of a gripe for me.
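As a rough sketch of what I mean, the two functions below do the same job. The names and the tiny opcode table are only for illustration and are not lifted from my repository. The first is the declare-then-assign pattern; the second is the closest Go gets to a switch expression, which is to wrap the switch in a function and return from every case.

```go
package main

import "fmt"

// describeStatement mirrors the pattern from the text: declare a
// variable first, then assign to it in every branch of the switch.
func describeStatement(opcode byte) string {
	var name string
	switch opcode {
	case 0x00:
		name = "NOP"
	case 0x76:
		name = "HALT"
	default:
		name = "UNKNOWN"
	}
	return name
}

// describeReturn avoids the temporary by returning from each case.
func describeReturn(opcode byte) string {
	switch opcode {
	case 0x00:
		return "NOP"
	case 0x76:
		return "HALT"
	default:
		return "UNKNOWN"
	}
}

func main() {
	// 0xD3 is not covered by either switch, so it hits the default case.
	for _, op := range []byte{0x00, 0x76, 0xD3} {
		fmt.Println(describeStatement(op), describeReturn(op))
	}
}
```

The second form reads better, but it only works when the switch is the whole function body, so it is a workaround rather than a true expression.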

Exhaustiveness

There is none, enough said. I wish they had it for cases like a switch over an integer or other simple cases. Given Go emphasises ‘errors as values’ as one of its key selling points, it seems odd to have missed something that could be a massive save for developers dealing with larger switch statements.

For Example, I would expect the following to yield a warning or an error:

x := 42

switch x {

case 1:

fmt.Println("The one and only")

}

//No error and I've not caught a case it should have

Another reason this annoys me is in Go, you do an ‘enum’ by just creating a list of ‘iota’ values which are just integers and I cannot prove I have handled all cases that can be valid.
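Here is a minimal sketch of the ‘iota’ problem. The Register type and its values are hypothetical names chosen for this example. The switch forgets one of the values, the compiler says nothing, and the only built-in safety net is a default branch that reports the gap at runtime.

```go
package main

import "fmt"

// Register is a hypothetical enum, declared the usual Go way with iota.
type Register int

const (
	RegA Register = iota
	RegB
	RegC
)

// name has no case for RegC; the compiler accepts this silently,
// so the default branch is what catches the missing case at runtime.
func name(r Register) (string, error) {
	switch r {
	case RegA:
		return "A", nil
	case RegB:
		return "B", nil
	default:
		return "", fmt.Errorf("unhandled register %d", int(r))
	}
}

func main() {
	for _, r := range []Register{RegA, RegB, RegC} {
		n, err := name(r)
		fmt.Println(n, err)
	}
}
```

Because Register is just an integer underneath, even a switch that lists every declared constant could still be handed a value like Register(42), which is why I cannot prove I have handled everything.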

Comparisons

Binary Type (static vs dynamically linked)

As far as I am aware, both languages produce static binaries. I know there are cases where Go will not create a static binary, however I also believe these are few and far between. To ensure this is not the case, you can disable any CGO using ‘CGO_ENABLED=0’ before a go build command (at least on macOS and Linux; I am uncertain about Windows, but you can look this up if you are on Windows).

Binary Size

| Language | Bytes | MB |

|---|---|---|

| Go | 2,409,472 | 2.4 |

| Zig | 814,776 | 0.8 |

Go does have further optimisations you can do, such as stripping out debug symbols, however this only took the binary size down to 1.6 MB (using the linker flags -s -w).

The difference here is both surprising and not surprising.

As we have determined above, they are both static binaries which include everything required to make it work. So in the case of Zig, you get… well you get just the binary however with Go, we have an entire runtime that we need to consider. Yes it can strip out all of the standard library we don’t use but there is still a garbage collector to consider.

The main part that does surprise me is the sheer size difference here: Zig is roughly a third the size of the Go program, which for space-constrained environments (microcontrollers etc.) would be very beneficial. However, I think that Go’s 2.4 MB is still a very small binary all things considered (at least from my very limited view of binary sizes over my years of development).

Execution Time and usage

Timings

| Language | Total Time | CPU %age |

|---|---|---|

| Go | 0.228s | 20 |

| Zig | 0.180s | 94 |

Raw time command outputs (aligned)

- GoLang : ./gogb 0.04s user 0.01s system 20% cpu 0.228 total

- Zig : ./ziggb 0.06s user 0.11s system 94% cpu 0.180 total

These benchmarks were done on a MacBook with an M4 Pro chip.

So this here surprises me the most. The CPU usage on both seems very high for what the program is doing, however this could be some optimisations kicking in or it could just be some overhead from reading in the file, I’m uncertain. If anyone has any idea how I can do a better job digging into this for my own understanding, please let me know as these numbers do surprise me.

Still, ignoring the CPU usage, we see that Go (at 0.228 seconds) takes around 27% longer than Zig (at 0.180 seconds). Again, this is not too surprising given the garbage collector, and I give props to both languages for being this fast.
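The size of the gap depends on which run you treat as the baseline, so here is a quick sketch of the arithmetic using the two totals from the table. The helper names are my own and are only for illustration.

```go
package main

import "fmt"

// percentLonger reports how much longer a takes than b, as a percentage of b.
func percentLonger(a, b float64) float64 {
	return (a - b) / b * 100
}

// percentShorter reports how much less time b takes than a, as a percentage of a.
func percentShorter(a, b float64) float64 {
	return (a - b) / a * 100
}

func main() {
	goTime, zigTime := 0.228, 0.180

	// Measured against Zig's time: prints 26.7%
	fmt.Printf("Go takes %.1f%% longer than Zig\n", percentLonger(goTime, zigTime))

	// Measured against Go's time: prints 21.1%
	fmt.Printf("Zig takes %.1f%% less time than Go\n", percentShorter(goTime, zigTime))
}
```

Both figures describe the same 0.048 second difference from a single run of each program, so neither should be read as a rigorous benchmark.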

It is possible this difference could be a game breaker and could get larger as a program grows, however I think that for smaller programs, you should not be making the language choice based on speed.

Outro

I’m not going to make any sweeping conclusions about which is better; they each have their place in the world and they are both good for different reasons. Yes, I have a preference, and so probably would anyone who had a chance to experience both languages. I encourage you to go and have a play with them; hopefully you find things that are similarly interesting to you to compare and if not, at least you tried something new.

Thanks for reading and I’ll see you in the next one.

Challenges

Below you will find a list of programming challenges. Feel free to give each of these a shot yourself or just read the post for the solutions and ideas for it.

Int Parser Challenge

2025-07-20

The Challenge

The challenge idea is simple. You get a string (something like “123”) and from that you must return a 32 bit integer. You can use everything in the language of your choosing except the built-in Int.Parse (or equivalent) method.

To clarify, it must match as expected: just converting to any old integer won’t do, e.g. “123” -> 123 and “427” -> 427. This means you can’t implement it as input.length or as my wonderful wife craftily found in Scala:

input.compareTo("123")

How you choose to handle errors is entirely up to you, you can return a null or optional value, you can throw an exception, you can return an error type, the choice is entirely yours.

Before starting to talk about the solution, I will challenge you to give this short coding problem a go yourself before reading on to the solution. This post is formatted in steps to explain the solution; so if you are uncertain of where to start, you should be able to read the next section and hopefully it will provide a hint as to the next step you are able to take.

All the code examples (up until the last one) are written in the Dart Programming language which can be found at https://dart.dev/

Characters are Integers

The first thing to know to be able to work along with this problem is ASCII. The American Standard Code for Information Interchange (or ASCII) is an encoding that was created in 1963 and is the building block of what we will talk about today. For reference, while most computers will use more modern text encoding formats such as UTF-8 or UTF-16, both of these have the same starting encoding as ASCII, making them backwards compatible. We will use ASCII in this post as it is a smaller subset and easier to deal with.

Below is a subset of the full ASCII table, you can see the full table at the bottom of the post under the Appendix.

| Decimal | Character | Description |

|---|---|---|

| 46 | . | Period |

| 47 | / | Slash |

| 48 | 0 | Digit zero |

| 49 | 1 | Digit one |

| 50 | 2 | Digit two |

| 51 | 3 | Digit three |

| 52 | 4 | Digit four |

| 53 | 5 | Digit five |

| 54 | 6 | Digit six |

| 55 | 7 | Digit seven |

| 56 | 8 | Digit eight |

| 57 | 9 | Digit nine |

| 58 | : | Colon |

| 59 | ; | Semicolon |

| 60 | < | Less-than sign |

From this table, note that the digit characters in the string are actually represented in memory as the integers 48 to 57. That means to be able to get the integer values of the actual character, we can subtract 48 from the character to get the values.

void main() {

const strInt = "842";

final values = strInt.codeUnits;

print(values);

final mappedValues = values.map((v) => v-48);

print(mappedValues);

}

// OUTPUT:

// [56, 52, 50]

// (8, 4, 2)

While the codeUnits property of strings in Dart returns UTF-16 code units, as the start of this encoding and ASCII are the same, it works for our example.

You can put the above code into dartpad.dev to have a play around and improve your understanding of this section.
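If you would rather see the same idea outside of Dart, here is a minimal sketch in Go. It is only an illustration: the function name is made up, and it assumes the input contains nothing but the digits 0 to 9. Go strings are indexed by byte, so subtracting the byte for '0' (which is 48) gives the digit value directly.

```go
package main

import "fmt"

// digitValues converts each ASCII digit byte to its numeric value by
// subtracting the code for '0' (48). It assumes the input holds only digits.
func digitValues(s string) []int {
	values := make([]int, 0, len(s))
	for i := 0; i < len(s); i++ {
		values = append(values, int(s[i]-'0'))
	}
	return values
}

func main() {
	fmt.Println([]byte("842"))      // the raw codes: [56 52 50]
	fmt.Println(digitValues("842")) // the digit values: [8 4 2]
}
```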

To move left is to multiply

So now we have the individual digits as integers, we need to figure out how to be able to turn that into a number that we can actually use at the right place in our final integer. By this I mean if we take the example of “123”, we have the numbers 1, 2, and 3 individually but not 100 or 20 which is what we really need. So we need to figure out how to make each of these turn into the correct number.

If we need to make 2 turn into 20, we can multiply by 10; to make 1 turn into 100, we can multiply by 100. You may start to see a pattern here. The multiplier we need is always 1 followed by some number of zeros: 10, 100, 1000 etc. Something oddly useful here is that these are exactly the powers of 10. For example

- 10 ^ 0 = 1

- 10 ^ 1 = 10

- 10 ^ 2 = 100

Seems rather handy.

So if we were to table the index of our numbers with this we see a problem

| Index | Number | 10 ^ index | Resulting |

|---|---|---|---|

| 0 | 1 | 1 | 1 |

| 1 | 2 | 10 | 20 |

| 2 | 3 | 100 | 300 |

Immediately we see that our 300 is higher than the actual number we are trying to get (123) so this clearly isn’t right. Have a think about how this could be changed to be correct before reading the next part.

The answer is to reverse our numbers before indexing them, doing so results in the following:

| Index | Number | 10 ^ index | Resulting |

|---|---|---|---|

| 0 | 3 | 1 | 3 |

| 1 | 2 | 10 | 20 |

| 2 | 1 | 100 | 100 |

Now none of these numbers are over our expected amount.

A code example for getting these numbers could look like the following:

import 'dart:math';

void main() {

const intValues = [1,2,3];

List<int> mapped = [];

for(int i = 0; i < intValues.length; i++){

// The '-1' is to stop the off by 1 error while indexing

final value = intValues[intValues.length - i - 1];

final multiplier = pow(10, i);

mapped.add((value * multiplier) as int);

}

print(mapped);

}

// OUTPUT:

// [3, 20, 100]

You can place the code above into dartpad.dev to have a play around with it.

Putting it all together

Finally, we need to put them all together to find our actual final number. In the case of our example, we can simply add them all together into a final variable. This can be done with a for loop or a more functional concept (e.g. fold). An example of this code would be:

void main() {

const intValues = [3, 20, 100];

var result = 0;

for (var value in intValues){

result += value;

}

print(result);

print(result.runtimeType);

}

// OUTPUT:

// 123

// int

Putting all of these steps together gives you the following program (variable names may be different from examples).

import 'dart:math';

void main() {

String number = "123";

//Section 1: Characters are Integers

var numbers = number.codeUnits.map((v) => v - 48).toList();

List<int> values = [];

//Section 2: To move left is to multiply

for(int i = 0; i < numbers.length; i++){

// The '-1' is to stop the off by 1 error while indexing

final value = numbers[numbers.length - i - 1];

final multiplier = pow(10, i);

values.add((value * multiplier) as int);

}

//Section 3: Putting it all together

var result = 0;

for(var value in values){

result += value;

}

print(result);

print(result.runtimeType);

}

// OUTPUT:

// 123

// int

I have added comments that link to each section so that the full implementation can be linked back to the parts of this post.

And just like that we have solved our problem. Now to be fair this is how the code ended up by breaking it down and discovering each step. When taking this challenge on myself, the code I produced was different and is in the next section with more explanation, but we do have a working function that will convert a string to an integer without using the built in parsing method.

Final Code

Dart

In Dart, I made 2 versions, a naive version (the function named unsafeParseInt) which assumes we are being passed a valid input as a string. The second does checks to ensure they are all numbers and if it encounters anything not in that range, it returns null instead. This was my chosen error handling method as I also set myself the further challenge of not allowing myself to assign a variable directly and do it all with method chaining. It makes the code much harder to read but was a fun code golf challenge to myself.

const AsciiZero = 48;

const AsciiNine = 57;

int unsafeParseInt(String input) =>

input.codeUnits.map((x) => x - AsciiZero).fold(0, (a, b) => (a * 10) + b);

int? safeParseInt(String input) => input.codeUnits

.map((x) => (x < AsciiZero || x > AsciiNine) ? null : (x - AsciiZero))

.fold(0, (acc, value) => (acc == null || value == null) ? null : (acc * 10) + value);

void main() {

final value = unsafeParseInt("123");

print(value);

print(value.runtimeType);

}
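The fold in these functions is worth a second look, because it skips the reversing and the powers of 10 from the earlier steps. It walks the digits left to right and multiplies the running total by 10 before adding the next digit, so “123” becomes ((0 * 10 + 1) * 10 + 2) * 10 + 3. Below is a rough sketch of that same accumulation written as a plain loop in Go. The function name is hypothetical, rejecting empty input is my own choice for the sketch, and it makes no attempt to detect overflow.

```go
package main

import "fmt"

// parseDigits is a hypothetical helper: it walks the string left to right,
// multiplying the running total by 10 before adding each new digit.
// The boolean is false when the input is empty or has a non-digit byte.
func parseDigits(s string) (int, bool) {
	if len(s) == 0 {
		return 0, false
	}
	total := 0
	for i := 0; i < len(s); i++ {
		c := s[i]
		if c < '0' || c > '9' {
			return 0, false
		}
		total = total*10 + int(c-'0')
	}
	return total, true
}

func main() {
	fmt.Println(parseDigits("123"))  // 123 true
	fmt.Println(parseDigits("12a3")) // 0 false
}
```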

Some questions you could ask yourself on this could be:

- What other restrictions could I add to make this more difficult?

- Could I implement it in another language?

- What better ways of handling errors could there be?

- Should I handle negative numbers?

I asked myself some of these and that lead me to the second implementation below.

Zig

For this part, I took questions 2, 3 and 4 from the above and chose to implement it in a language that supports native errors as values. The language I chose was Zig (apologies on the syntax highlighting, it is not supported in my site generator of choice). Here you can see how not limiting yourself allows you to be more explicit with the errors to state exactly what went wrong. In this example, I also added support for negative integers (starting with a ‘-’ ) which the Dart version did not.

const std = @import("std");

const expect = std.testing.expect;

pub fn main() !void {

const v = try parseInt("-2147483648");

std.debug.print("Main: {}", .{v});

}

const maximumInt32 = std.math.maxInt(i32);

fn parseInt(input: ?[]const u8) !i32 {

if (input == null) return error.NullInput;

const str = std.mem.trim(u8, input.?, " \n\r");

if (str.len == 0)

return error.EmptyParameter;

var index: usize = 0;

//Determine if negative or positive

const direction: i2 = switch (str[0]) {

'+' => 1,

'-' => -1,

else => 1,

};

//Skip the starting char if it is '+' or '-'

if (str[0] == '+' or str[0] == '-') {

index += 1;

}

//Using a u32 to allow for going over max i32 value

var result: u32 = 0;

for (str[index..]) |char| {

if (char >= '0' and char <= '9') {

result *= 10;

//Zig allows subtracting of characters as they are all u8's

result += (char - '0');

} else {

return error.InvalidCharacter;

}

}

//Determine if number is too large to go in int32

if (result > maximumInt32) {

return switch (direction) {

1 => error.ValueTooHigh,

-1 => error.ValueTooLow,

else => unreachable,

};

}

//Some type shenanigans to make compiler happy

const signedResult: i32 = @intCast(result);

//Multiply by direction to give a negative number if started with '-'

const finalResult = signedResult * direction;

return finalResult;

}

Many comments have been added to the above code to help with following along for those both unfamiliar to the problem space and to Zig.

You can find the test suite for both of these in the appendix.
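One edge case worth thinking about with signed parsing is the lowest 32 bit value, -2147483648, whose magnitude is one larger than the highest positive value. The sketch below is one way to handle it in Go and is not a port of the Zig function above: all the names are made up for illustration, it does not trim whitespace, and it treats a lone sign as empty input. It accumulates in a 64 bit integer and checks the range after every digit, so the accumulator cannot overflow however long the input is.

```go
package main

import (
	"errors"
	"fmt"
	"math"
)

// Hypothetical error values for this sketch.
var (
	errEmpty   = errors.New("empty input")
	errInvalid = errors.New("invalid character")
	errRange   = errors.New("value out of int32 range")
)

// parseInt32 parses an optionally signed decimal string into an int32.
func parseInt32(s string) (int32, error) {
	if len(s) == 0 {
		return 0, errEmpty
	}
	sign := int64(1)
	if s[0] == '+' || s[0] == '-' {
		if s[0] == '-' {
			sign = -1
		}
		s = s[1:]
		if len(s) == 0 {
			return 0, errEmpty
		}
	}
	var total int64
	for i := 0; i < len(s); i++ {
		c := s[i]
		if c < '0' || c > '9' {
			return 0, errInvalid
		}
		total = total*10 + int64(c-'0')
		// The magnitude may reach 2147483648, but only for negatives.
		if sign*total > math.MaxInt32 || sign*total < math.MinInt32 {
			return 0, errRange
		}
	}
	return int32(sign * total), nil
}

func main() {
	for _, in := range []string{"123", "-2147483648", "2147483648", "--123"} {
		v, err := parseInt32(in)
		fmt.Println(in, v, err)
	}
}
```

Checking inside the loop rather than once at the end is the design choice that matters here: a very long run of digits is rejected as soon as it leaves the valid range, before the accumulator has any chance to wrap around.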

Conclusion

I hope you have enjoyed reading this and I also hope you gave this challenge a try yourself before reading. If you did, feel free to share your solution with me by leaving a comment on my gist available here.

One more thing!

If you enjoyed this post or you’d like to see either a more Test Driven (TDD) approach or just see the same problem addressed in C#, you may enjoy this post, Parse Integers Manually, from my friend over at Illumonos.

(If you came from there, thanks for checking out my post also, apologies for the looping links but feel free to read Illumonos’s post again!)

Appendix

Dart Final Code Tests

import 'package:test/test.dart';

import 'main.dart';

void main() {

group("unsafe parse int", () {

final testCases = [

("1", 1),

("123", 123),

];

testCases.forEach((testCase) {

test("Given '${testCase.$1}' with type ${testCase.$1.runtimeType}, returns '${testCase.$2}' with type ${testCase.$2.runtimeType}", () {

var result = unsafeParseInt(testCase.$1);

expect(result, testCase.$2);

});

});

});

group("safe parse int", () {

final testCases = [

("123", 123),

("101", 101),

("1", 1),

(" ", null),

("\n", null),

("abc", null)

];

testCases.forEach((testCase) {

test("Given '${testCase.$1}' with type ${testCase.$1.runtimeType}, returns '${testCase.$2}' with type ${testCase.$2.runtimeType},", () {

var result = safeParseInt(testCase.$1);

expect(result, testCase.$2);

});

});

});

}

Zig Final Code Tests

test "parses successfully" {
    const result = try parseInt("1");
    try expect(result == 1);

    const result2 = try parseInt("123");
    try expect(result2 == 123);

    const result3 = try parseInt(" 456");
    try expect(result3 == 456);

    const result4 = try parseInt("457 ");
    try expect(result4 == 457);

    const result5 = try parseInt(" 458 ");
    try expect(result5 == 458);

    const result6 = try parseInt("-789");
    try expect(result6 == -789);

    const result7 = try parseInt("+42");
    try expect(result7 == 42);

    const result8 = try parseInt("0");
    try expect(result8 == 0);

    const result9 = try parseInt("00");
    try expect(result9 == 0);
}

test "parses unsuccessfully" {
    // Each case only checks which error comes back; a successful parse
    // would skip the catch branch entirely.
    _ = parseInt(null) catch |err| switch (err) {
        error.NullInput => try expect(true),
        else => try expect(false),
    };

    _ = parseInt("") catch |err| switch (err) {
        error.EmptyParameter => try expect(true),
        else => try expect(false),
    };

    _ = parseInt(" ") catch |err| switch (err) {
        error.EmptyParameter => try expect(true),
        else => try expect(false),
    };

    _ = parseInt("abc") catch |err| switch (err) {
        error.InvalidCharacter => try expect(true),
        else => try expect(false),
    };

    _ = parseInt("12a3") catch |err| switch (err) {
        error.InvalidCharacter => try expect(true),
        else => try expect(false),
    };

    _ = parseInt("--123") catch |err| switch (err) {
        error.InvalidCharacter => try expect(true),
        else => try expect(false),
    };

    _ = parseInt("2147483648") catch |err| switch (err) {
        error.ValueTooHigh => try expect(true),
        else => try expect(false),
    };

    _ = parseInt("-2147483648") catch |err| switch (err) {
        error.ValueTooLow => try expect(true),
        else => try expect(false),
    };
}

Full ASCII Table

| Decimal | Character | Description |

|---|---|---|

| 0 | NUL | Null character |

| 1 | SOH | Start of Header |

| 2 | STX | Start of Text |

| 3 | ETX | End of Text |

| 4 | EOT | End of Transmission |

| 5 | ENQ | Enquiry |

| 6 | ACK | Acknowledge |

| 7 | BEL | Bell |

| 8 | BS | Backspace |

| 9 | TAB | Horizontal Tab |

| 10 | LF | Line Feed |

| 11 | VT | Vertical Tab |

| 12 | FF | Form Feed |

| 13 | CR | Carriage Return |

| 14 | SO | Shift Out |

| 15 | SI | Shift In |

| 16–31 | Control characters | |

| 32 | SPACE | Space |

| 33 | ! | Exclamation mark |

| 34 | " | Double quote |

| 35 | # | Hash sign |

| 36 | $ | Dollar sign |

| 37 | % | Percent sign |

| 38 | & | Ampersand |

| 39 | ' | Single quote |

| 40 | ( | Left parenthesis |

| 41 | ) | Right parenthesis |

| 42 | * | Asterisk |

| 43 | + | Plus sign |

| 44 | , | Comma |

| 45 | - | Hyphen |

| 46 | . | Period |

| 47 | / | Slash |

| 48 | 0 | Digit zero |

| 49 | 1 | Digit one |

| 50 | 2 | Digit two |

| 51 | 3 | Digit three |

| 52 | 4 | Digit four |

| 53 | 5 | Digit five |

| 54 | 6 | Digit six |

| 55 | 7 | Digit seven |

| 56 | 8 | Digit eight |

| 57 | 9 | Digit nine |

| 58 | : | Colon |

| 59 | ; | Semicolon |

| 60 | < | Less-than sign |

| 61 | = | Equals sign |

| 62 | > | Greater-than sign |

| 63 | ? | Question mark |

| 64 | @ | At sign |

| 65 | A | Uppercase A |

| 66 | B | Uppercase B |

| 67 | C | Uppercase C |

| 68 | D | Uppercase D |

| 69 | E | Uppercase E |

| 70 | F | Uppercase F |

| 71 | G | Uppercase G |

| 72 | H | Uppercase H |

| 73 | I | Uppercase I |

| 74 | J | Uppercase J |

| 75 | K | Uppercase K |

| 76 | L | Uppercase L |

| 77 | M | Uppercase M |

| 78 | N | Uppercase N |

| 79 | O | Uppercase O |

| 80 | P | Uppercase P |

| 81 | Q | Uppercase Q |

| 82 | R | Uppercase R |

| 83 | S | Uppercase S |

| 84 | T | Uppercase T |

| 85 | U | Uppercase U |

| 86 | V | Uppercase V |

| 87 | W | Uppercase W |

| 88 | X | Uppercase X |

| 89 | Y | Uppercase Y |

| 90 | Z | Uppercase Z |

| 91 | [ | Left square bracket |

| 92 | \ | Backslash |

| 93 | ] | Right square bracket |

| 94 | ^ | Caret |

| 95 | _ | Underscore |

| 96 | ` | Grave accent |

| 97 | a | Lowercase a |

| 98 | b | Lowercase b |

| 99 | c | Lowercase c |

| 100 | d | Lowercase d |

| 101 | e | Lowercase e |

| 102 | f | Lowercase f |

| 103 | g | Lowercase g |

| 104 | h | Lowercase h |

| 105 | i | Lowercase i |

| 106 | j | Lowercase j |

| 107 | k | Lowercase k |

| 108 | l | Lowercase l |

| 109 | m | Lowercase m |

| 110 | n | Lowercase n |

| 111 | o | Lowercase o |

| 112 | p | Lowercase p |

| 113 | q | Lowercase q |

| 114 | r | Lowercase r |

| 115 | s | Lowercase s |

| 116 | t | Lowercase t |

| 117 | u | Lowercase u |

| 118 | v | Lowercase v |

| 119 | w | Lowercase w |

| 120 | x | Lowercase x |

| 121 | y | Lowercase y |

| 122 | z | Lowercase z |

| 123 | { | Left curly brace |

| 124 | \| | Vertical bar |

| 125 | } | Right curly brace |

| 126 | ~ | Tilde |

| 127 | DEL | Delete |
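As a quick illustration of why this table matters for the challenge: the digits '0' to '9' sit at consecutive codes 48 to 57, so subtracting the code for '0' from a digit's code gives its numeric value. Below is a minimal sketch of that idea, written in Go rather than the Dart or Zig used above. The name `digitValue` is a hypothetical helper used only for this illustration and is not part of the final code.

```go
package main

import "fmt"

// digitValue is a hypothetical helper: it converts one ASCII digit
// (codes 48 to 57 in the table above) into its numeric value.
// The second return value is false for any byte that is not a digit.
func digitValue(b byte) (int, bool) {
	if b < '0' || b > '9' {
		return 0, false
	}
	// '0' is code 48, so '7' (55) minus '0' (48) leaves 7.
	return int(b - '0'), true
}

func main() {
	for _, b := range []byte("7a") {
		value, ok := digitValue(b)
		fmt.Printf("code %d: value=%d digit=%t\n", b, value, ok)
	}
}
```

The range check comes first so that neighbouring characters such as '/' (47) and ':' (58) are rejected rather than turned into nonsense values.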

Morse Code Translation

16/7/2025

It’s time for another short programming challenge. As usual, I’ll be using a different language for this one and showcasing some of its features. I’ll also give the outline of the challenge and allow you to give it a shot yourself before I explain how I went about it.

These challenges have been a fun little brain teaser for me and have been a nice departure from my day-to-day job, helping to keep the old mind alive. I hope you find the same thing for yourself.

Anything in italics is a good stopping point for you to have a think about the challenge for yourself and maybe try the next part by copying the snippets in the post to that point.

Challenge constraints

If you do not wish to partake in the challenge and you just want to read about the solution (written in Go), then please feel free to jump to the next header, named Solution.

You will be given a string as input. You must determine if the string is a Morse code input (e.g. “.- .---”) or an alphanumeric input (e.g. “Hello World”). From there, you must translate to the opposite type and output a new string. The encoding is as follows in both directions:

| Character | Morse |
|---|---|
| A | .- |
| B | -... |
| C | -.-. |
| D | -.. |
| E | . |
| F | ..-. |
| G | --. |
| H | .... |
| I | .. |
| J | .--- |
| K | -.- |
| L | .-.. |
| M | -- |
| N | -. |
| O | --- |
| P | .--. |
| Q | --.- |
| R | .-. |
| S | ... |
| T | - |
| U | ..- |
| V | ...- |
| W | .-- |
| X | -..- |
| Y | -.-- |
| Z | --.. |
| 0 | ----- |
| 1 | .---- |
| 2 | ..--- |
| 3 | ...-- |
| 4 | ....- |
| 5 | ..... |
| 6 | -.... |
| 7 | --... |
| 8 | ---.. |
| 9 | ----. |
| ' ' (space) | / |

There are also the following test cases, which can be used to check your implementation:

Morse to Text test cases

| Input | Output | Expected Error |
|---|---|---|
| .- | "A" | None |
| -... | "B" | None |
| -.. | "D" | None |
| . | "E" | None |
| .---- | "1" | None |
| .... . .-.. .-.. --- / .-- --- .-. .-.. -.. | "HELLO WORLD" | None |
| "  " (Two spaces) | None | Error, double spaces not allowed |
| ..-..-..-..-..-.. | None | Error, invalid morse character |
| .- .- A | None | Error, invalid character (A) |
| _ (Underscore) | None | Error, invalid morse character |
| — (May need to copy string as it is a character that looks like a -) | None | Error, invalid Morse character |
| − (May need to copy string as it is a character that looks like a -) | None | Error, invalid Morse character |

When decoding Morse to a string, the output should be all uppercase (Morse is case-insensitive, so the original casing cannot be recovered).

Text to Morse test cases

| Input | Output | Expected Error |
|---|---|---|
| A | .- | None |
| B | -... | None |
| D | -.. | None |
| E | . | None |
| 1 | .---- | None |
| Hello World | .... . .-.. .-.. --- / .-- --- .-. .-.. -.. | None |
| "  " (Two spaces) | None | Error, double spaces not allowed |
| ..-..-..-..-..-.. | None | Error, invalid morse character |
| "A sentence." | None | Error, invalid character (.) |
| "@#$%" | None | Error, invalid characters (@#$%) |
| _ (Underscore) | None | Error, invalid morse character |
| — (May need to copy string as it is a character that looks like a -) | None | Error, invalid Morse character |
| − (May need to copy string as it is a character that looks like a -) | None | Error, invalid Morse character |

When encoding as Morse, all letters will be separated by a space, and the space between words will be encoded as ‘/’. Also, Morse is case-insensitive, so you should be able to use both upper and lowercase characters for the message.

Solution

Now that we have the test cases and the parameters set, let’s get on with the implementation! At any point, you can check out the full code for this at my GitHub repo for this challenge.

Getting the translations in place

First and foremost, we need some way to store these translations in the application. My first thought is a Go map (many languages call them different things, such as Dictionary, but Go calls them maps!). But I also don’t want to keep a duplicate copy of these translations, where adding a new character means remembering to add it in both places. OK, so I can loop over some key-value pairs and put them into two maps, one for encoding and one for decoding. So what is the best way to go about this? It can’t be done statically, or can it?

No, at least not in Go sadly! We can however use something that is very close and while not static, does allow us to initialize variables at runtime based on some logic without us calling it.

In Go, there is a special function called ‘init’ which, when present in a package, gets run automatically when the package is initialized, before main starts. This allows us to do some dynamic processing without having to call it explicitly, and keeps it invisible to any users of the library. So let’s look at this.

var encryptMap map[rune]MorseCode = make(map[rune]MorseCode)
var decryptMap map[MorseCode]rune = make(map[MorseCode]rune)

func init() {
	codes := map[rune]MorseCode{
		'A': ".-",
		'B': "-...",
		'C': "-.-.",
		'D': "-..",
		'E': ".",
		'F': "..-.",
		'G': "--.",
		'H': "....",
		'I': "..",
		'J': ".---",
		'K': "-.-",
		'L': ".-..",
		'M': "--",
		'N': "-.",
		'O': "---",
		'P': ".--.",
		'Q': "--.-",
		'R': ".-.",
		'S': "...",
		'T': "-",
		'U': "..-",
		'V': "...-",
		'W': ".--",
		'X': "-..-",
		'Y': "-.--",
		'Z': "--..",
		'0': "-----",
		'1': ".----",
		'2': "..---",
		'3': "...--",
		'4': "....-",
		'5': ".....",
		'6': "-....",
		'7': "--...",
		'8': "---..",
		'9': "----.",
		' ': "/",
	}

	for c, v := range codes {
		encryptMap[c] = v
		decryptMap[v] = c
	}
}

For reference, Go’s rune type holds a single Unicode code point, which is the closest thing Go has to a character type, so you can substitute the word rune with character if that helps with reading the code samples.
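To see both ideas in one place, here is a small self-contained sketch: init fills the two maps before main runs, and ranging over a string hands you one rune at a time. It assumes MorseCode is a named string type (its definition is not shown in this part of the post), uses a trimmed two-entry table, and `encodeWord` is a hypothetical helper for illustration only, not the final solution.

```go
package main

import (
	"fmt"
	"strings"
)

// Assumption: MorseCode is a named string type.
type MorseCode string

var encryptMap = make(map[rune]MorseCode)
var decryptMap = make(map[MorseCode]rune)

// init runs automatically during package initialization, before main.
func init() {
	// Trimmed table, for illustration only.
	codes := map[rune]MorseCode{'S': "...", 'O': "---"}
	for c, v := range codes {
		encryptMap[c] = v
		decryptMap[v] = c
	}
}

// encodeWord is a hypothetical helper: it looks up each rune of word
// and joins the codes with spaces. It reports false on an unknown rune.
func encodeWord(word string) (string, bool) {
	parts := make([]string, 0, len(word))
	for _, r := range word { // ranging over a string yields runes
		code, ok := encryptMap[r]
		if !ok {
			return "", false
		}
		parts = append(parts, string(code))
	}
	return strings.Join(parts, " "), true
}

func main() {
	encoded, ok := encodeWord("SOS")
	fmt.Println(encoded, ok)               // prints "... --- ... true"
	fmt.Println(string(decryptMap["..."])) // prints "S"
}
```

Nothing in main ever calls init, yet both lookups work, which is exactly the behaviour we want for a library: the tables are ready by the time anyone uses them.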